Tuesday, November 29, 2011

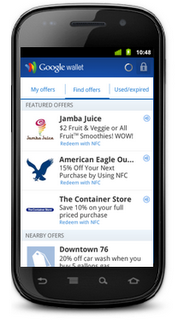

The Offers tab in Google Wallet

We’re hearing from people at check-out counters throughout the country that paying with your phone is a little like magic. Just look at the ecstatic reaction on the faces of our friends who made their first Google Wallet purchases last Thursday.

Today, our partners American Eagle Outfitters, The Container Store, Foot Locker, Guess, Jamba Juice, Macy’s, OfficeMax and Toys“R”Us are rolling out an even better Google Wallet experience. For the first time ever in the U.S., at these select stores, you can not only pay but also redeem coupons and/or earn rewards points—all with a single tap of your phone. This is what we call the Google Wallet SingleTap experience.

With Google Wallet in hand, you can walk into a Jamba Juice, American Eagle Outfitters or any other partner store. Once you’ve ordered that Razzmatazz smoothie or found the right color Slim Jean, head straight to the cashier and tap your phone to pay and save—that’s it. You don’t have to shuffle around to find the right coupon to scan or rewards card to stamp because it all happens in the blink of an eye.

The Offers tab in Google Wallet has been updated to include a new "Featured Offers" section with discounts that are exclusive to Google Wallet. Today, these include 15% off at American Eagle Outfitters, 10% off at The Container Store, 15% off at Macy’s and an all-fruit smoothie for $2 at Jamba Juice. There are many more Google Wallet exclusive discounts to come, and you can save your favorites in Google Wallet so they’ll be automatically applied to your bill when you check out.

Organizing loyalty cards in your wallet is getting easier too. Today, Foot Locker, Guess, OfficeMax and American Eagle Outfitters are providing loyalty cards for Google Wallet so you can rack up reward points automatically as you shop. More of these are on the way.

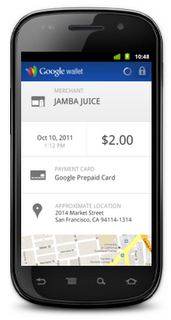

One more thing—in response to user feedback, we’ve improved transaction details for the Google Prepaid Card with real-time transaction information including merchant name, location, dollar value and time of each transaction. Here’s what it looks like:

Finally, a special thanks to Chevron, D’Agostino, Faber News Now, Gristedes Supermarkets and Pinkberry who are now also working to equip their stores to accept Google Wallet.

It’s still early days for Google Wallet, but this is an important step in expanding the ecosystem of participating merchants to make shopping faster and easier in more places. If you’re a merchant and want to work with us to make shopping easier for your customers and connect with them in new ways, please sign up on the Google Wallet site. And if you’re a shopper and want to purchase a Nexus S 4G phone from Sprint with Google Wallet, visit this page.

Cross-posted on the Official Google Blog

Monday, November 28, 2011

Google Starting to Integrate Google Plus Pages with Places

The ever observant Plamen and his friend Stacy sent along a Places Search screenshot that clearly shows a Google Plus Page icon next to a Places search result:

When you click on the icon it shows a Google Plus Page for a different Transmission service.

It makes sense that Google would integrate Google Plus pages with their Local results. What better way to both allow a business to highlight the Page’s presence and incent other businesses to claim their Google Plus Page and keep it updated with meaningful information.

I assume that this is a test, but it is an interesting one.

————————

Here is the syntax of the URL:

http://www.google.com/search?q=transmission+servicing+lexington+ky&hl=en&safe=off&client=safari&rls=en&prmd=imvns&source=lnms&tbm=plcs&ei=-PbMTtWiDeXK0AHni8D6Dw&sa=X&oi=mode_link&ct=mode&cd=8&ved=0CF0Q_AUoBw&prmdo=1&bav=on.2,or.r_gc.r_pw.r_cp.,cf.osb&biw=1435&bih=721&emsg=NCSR&noj=1&ei=CvnMTrGUH4Pz0gGZ5DQ
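To make that long URL easier to read, here is a small sketch that splits it into its query parameters using Python's standard library. The reading of `tbm=plcs` as the switch that selects Places Search is my assumption from the context of the screenshot, not something Google documents here; the other parameters are simply listed as found.

```python
from urllib.parse import urlsplit, parse_qs

# The URL from the post, joined back into a single string.
URL = (
    "http://www.google.com/search?q=transmission+servicing+lexington+ky&hl=en&safe=off"
    "&client=safari&rls=en&prmd=imvns&source=lnms&tbm=plcs"
    "&ei=-PbMTtWiDeXK0AHni8D6Dw"
    "&sa=X&oi=mode_link&ct=mode&cd=8&ved=0CF0Q_AUoBw&prmdo=1"
    "&bav=on.2,or.r_gc.r_pw.r_cp.,cf.osb&biw=1435&bih=721"
    "&emsg=NCSR&noj=1&ei=CvnMTrGUH4Pz0gGZ5DQ"
)


def query_params(url):
    """Return the query string as a dict mapping each name to a list of values."""
    return parse_qs(urlsplit(url).query)


params = query_params(URL)
for name, values in sorted(params.items()):
    # A parameter that appears twice (such as ei) keeps both values in its list.
    print(name, values)
```

Note that `ei` appears twice in the URL, so its list holds two values.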


Examples Of Google Plus Integrated Into Local Search

Several additional instances of Google Plus integration into local search results have been found by Renan Cesar, a Brazilian search marketer. It has also been brought to my attention by Sebastian Socha that examples are now visible in Germany.

In both of these examples, the images, when clicked, go to an intermediate search result highlighting the appropriate Plus Page with an option to add them to your circle. In the first instance the intermediary result shows a recent post. In both cases the Plus page is correctly identified. The intermediate results page that you are taken to offers a somewhat awkward, beta-like experience, as it is neither a Plus page nor a true search result.

At this point, Plus results for local are only showing in the Places Search, one click away from the main search results and thus less visible than they might be. If these results were to show in the main results, the opportunity to enhance a business listing with a large, juicy logo or image would be irresistible. As it is, this current rollout might convince some additional businesses to try Plus. It certainly seems to be pointing to much more visible exposure of Plus Business Pages.

My recommendation? Claim your Business Place Page to avoid squatting (which is all too easy at the moment), associate it with your website and minimally add a few compelling logos and photos.

On this Places Search example for Cake Box New Jersey you see a correctly integrated profile photo on all of their results from the business’s Google Plus page. The image clicks thru to an intermediary search result of a recent post on their Plus Page:

In the second example, the Plus page for Manta has been integrated into the organic section of Places search for NEC Store Manhattan.

Friday, November 25, 2011

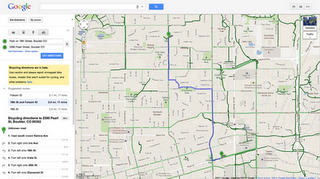

Google Maps and the green route

We’ve learned that most people who use Google Maps just want to get from Point A to Point B -- as quickly and painlessly as possible. Whether it’s planning a weekend bike route, finding the quickest roads during rush hour, or identifying bus paths with the fewest transfers, people are making the most out of Maps features to travel faster and greener. Here’s how you can use Google Maps to minimize your impact on the environment.

Commuting by bike

As an avid cyclist, I feel very fortunate to live in Boulder, Colorado and be surrounded by over 300 miles of bike lanes, routes and paths. When the weather is cooperating, I try to commute to work every day. It’s a great way to stay healthy and arrive at the office feeling awake! According to Biking Directions in Google Maps, it takes me 10 minutes to travel 2 miles from Point A (my house) to Point B (the office). It also shows three alternative routes, and I can check the weather in Google Maps to see if I’ll need a rain jacket for the ride home. I can also use Biking Directions on my mobile phone, which came in handy for me during a biking trip in Austin when I got lost on a major highway and needed a safe route back to the hotel.

Did you know that Google Maps has biking directions available in over 200 US cities and in 9 Canadian regions? A collaboration with Rails to Trails, an organization that converts old railroad tracks into bike paths, has made information about over 12,000 miles of bike paths available to Google Maps users.

Taking public transit

Millions of people use Google Transit every week, viewing public transit routes on both Google Maps and Google Maps for mobile in over 470 cities around the world. You can take a train from Kyoto to Osaka or find your way around London on the Tube. In some places, you can compare the cost of taking public transit and driving by viewing the calculation below the list of directions (like in this example). Feel free to customize your routes and departure and arrival times under “Show options” to minimize walking or limit the number of transfers. You’ll know if your bus is late by checking out live transit updates.

Being green in the car

According to the 2011 Urban Mobility Report by Texas Transportation Institute at Texas A&M University, motorists wasted 1.9 billion gallons of fuel last year in the US because of traffic congestion, costing the average commuter an additional $713 in commuting costs. You can save time and money by clicking on the Traffic layer in the top right corner to view real-time traffic conditions on your route, and then drag and adjust your route to green. If you need directions in advance, save paper by sending your directions directly to your car, GPS, or phone. The Send-to-Car feature is available to more than 20 car brands worldwide, and the Send-to-GPS feature is available to more than 10 GPS brands. And for those of you who drive electric vehicles, you can search for electric vehicle charging stations by typing “ev charging station in [your city]” and recharge.

As you can see, Google Maps is loaded with features to help you save time, save money, and get where you need to be -- all while minimizing your commute’s impact on the environment.

Wednesday, November 23, 2011

The European Google Trailblazer Awards

Last week in our Zurich office, we held a celebratory event for the 20 winners of the second annual European Google Trailblazer Awards, intended to recognize students that exhibit great potential in science and engineering. The eight girls and 12 boys aged 16-19 were selected for their work in national science, informatics and engineering competitions that took place in Germany, Hungary, Ireland, Romania, Switzerland and the U.K. over the past year. Partnering with each of these competitions, Google engineers awarded “Trailblazer” status to the participants who demonstrated an outstanding use of computing technology in their projects. The aim of the distinction is to reward and encourage these students’ achievements, bring talented students to experience life at Google and show them what a career in computer science can look like, with a special emphasis on how computer science touches every discipline.

Every Trailblazer winner this year was truly worthy of the title. Ciara, Ruth and Kate, three of the winners of the BT Young Scientist competition in Ireland, taught themselves to code in order to develop a mobile app for teens to measure their carbon footprints, looking at their use of typical teenage appliances like MP3 players, hair straighteners and computer games. Joszef, one of the winners of the Scientific and Innovation Contest for Youth in Hungary, developed a portable heart monitor combined with GPS that would alert medical services instantly if you were having a heart attack, and include your location so they could respond quickly. Tom and Yannick, winners of the Junior Web Awards in Switzerland, learned HTML and CSS in order to build an interactive health website, and made it available in French, German and English. These are just a few examples.

While at Google Zurich, the Trailblazers covered a wide swath of material, learning about data centers, security and testing, hearing from the Street View team on managing operations in multiple countries and from recruiters on how to write a strong resume. Google engineers chatted about careers in computer science and then tasked the group to solve problems like a software engineer: imitating how a software program might work, the participants lined up in groups of six and had to create an algorithm to reorder themselves without speaking to each other during the re-arranging. For their most in-depth challenge, the students developed and pitched their own award-winning product with guidance from product managers. In just 20 minutes, each student had to come up with product ideas and a pitch—delivered to the product managers—that would convince even Larry Page that their tech product would be the next big thing.

The students left Zurich buzzing about the pathways a career in tech can lead them down, and we can’t wait to see how these young entrepreneurs develop over the next few years.

If you’d like a shot at becoming a Google Trailblazer in 2012, enter one of our partner competitions in Germany, Hungary, Ireland, Romania, Switzerland or the U.K. (you need to be at high school in one of these countries to be eligible for entry). More countries and partner competitions will be added each year, so keep an eye on google.com/edu for further details.

If you’re the organizer of a pre-existing national science and engineering competition in Europe, the Middle East or Africa (EMEA) and would like your competition to be considered for a Trailblazer prize from Google, please complete this form.

Afghanistan through maps using Google Map Maker

Striking for its charm, bustle and impressive historic architectural treasures, the Silk Road city of Herat, Afghanistan has, until today, been nearly ‘invisible’ on digital maps. Luckily, Herat is home to Herat University, which is filled with talented, entrepreneurial students, some of whom have worked together to literally put their town “on the (digital) map.”

In response to their city’s absence on Google Maps and Google Earth, a team of students launched a grassroots volunteer effort, named the Afghan Map Makers, to map their country using Google Map Maker, with the vision of reintroducing Afghanistan to its people and the world through its maps. As a result of their efforts, we are proud to re-launch the map of Afghanistan, with a far more detailed Herat, along with 11 more regions, on Google Maps.


The Afghan Map Maker Team hard at work, mapping Herat, Afghanistan

Led by recent computer science graduates Hasen and Sadeq, along with other volunteers from their tech start-up, Microcis IT Services CO, the Afghan Map Makers realized how mapping could improve Afghanistan’s economic, community and civic development. Starting from old paper maps and basic satellite imagery, the Afghan Map Makers employed unmatched strategy and diligence to map Herat in only a matter of weeks.

Shortly thereafter, a new tech start-up incubator, the Herat Information Technology Incubator Program for Herat University entrepreneurs, was founded with the help of the Department of Defense, giving five more start-up companies the resources to volunteer spare time to the mapping initiative. The group divided Herat’s map into six equal parts, and each set of volunteers was responsible for mapping a particular section of the city.

Herat, Afghanistan: The “before and after” efforts of volunteer mappers from Microsis, Afghan Citadel Software Company, Afghan Cybersoft, Asia Cyber Tech, Herat4soo.com and Taktech.

Represented by Hasen Poreya, the Afghan Map Makers presented their project during a June visit to the Mountain View and New York Google offices. According to Hasen, the group encountered many roadblocks from the very beginning; everything from connectivity issues to old satellite imagery and an initial lack of volunteers. Since then, they’ve laid out the map of Herat and several volunteers have already mapped most of Kabul, Afghanistan. You can watch a mapping time lapse of Herat here and Kabul here.

This story is far more significant than the mapping of a city. It is a story of young people in a community coming together, taking ownership of their future and serving their country in a truly entrepreneurial and meaningful way. Congratulations to Hasen, Sadeq, the Herat start-up teams and thousands of Map Makers in more than 180 other countries, helping to map the world.


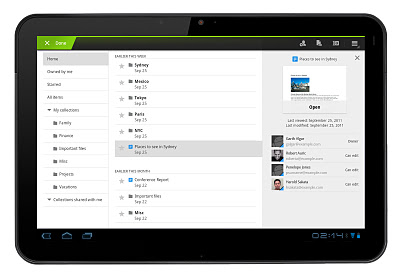

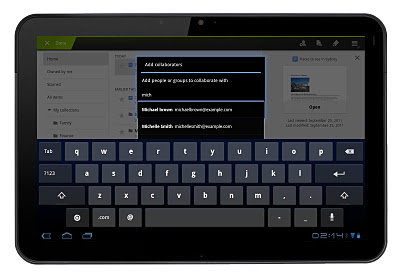

Google Docs experience on Android tablet

Earlier this year, we introduced the Google Docs app for Android. Since then, many users have downloaded the app and enjoyed the benefits of being able to access, edit and share docs on the go.

Today’s update to the app makes Google Docs work better than ever on your tablet. With an entirely new design, we’ve customized the look to make the most of the larger screen space on tablets. The layout includes a three-panel view, which allows you to navigate through filters and collections, view your document list, and see document details, all at once.

Looking at the details panel on the right side, you can see a thumbnail to preview a document and its details before opening it. From the panel, you can see who can view or edit any doc.

These features are now available in 46 languages on tablet devices running Android 3.0 (Honeycomb) and above.

You can download the app from the Android Market and let us know what you think in the comments or by posting on the forum. Learn more by visiting the help center.

New 3-panel view for improved browsing

Saturday, November 19, 2011

Google Summer of Code students and HelenOS

This year HelenOS, an operating system based on a multiserver microkernel design originating from Charles University, Prague, had the privilege of becoming a mentoring organization in the Google Summer of Code program. Our preparation began a couple of months before mentoring organizations started submitting their applications, but the real fun hit us when we were accepted as a mentoring organization and when the students started sending us their project proposals.

We put together about a dozen ideas for student projects and received about twenty or so official proposals. HelenOS had three mentors and we were thrilled to be given three student slots for our first year in the program. During the student application period we received a lot of patches from candidate students determined to prove their motivation and ability to work with us. It was no coincidence that the three students we chose submitted some of the most interesting patches and did the most thorough research. Two students sent us enough material to seriously attack the first milestones of their respective projects and the third student fixed several bugs, one of them on the MIPS architecture.

The three selected students were Petr Koupy, Oleg Romanenko and Jiri Zarevucky. Petr and Jiri worked on related projects focused on delivering parts of the C compiler toolchain to HelenOS while also improving our C library and improving compatibility with C and POSIX standards. Oleg chose to further extend our FAT file system server by implementing support for FAT12, FAT32, long file name extension and initial support for the exFAT file system.

When the community bonding period began and we started regular communication with our students, all three mentors, Martin Decky, Jiri Svoboda and Jakub Jermar, noticed an interesting phenomenon. Our students seemed a little shy at first, preferring one-on-one communication with their mentor, as opposed to more open communication on the mailing list. This bore some signs of the students expecting the same kind of interaction a student receives while working on a school project with a single supervisor and evaluator. Even though not entirely unexpected, this was not exactly how we intended for the students to communicate with the HelenOS team but we eventually managed to change this trend.

In short, all three of our students were pretty much technically trouble-free and met their mid-term and final milestones securely. To our relief, this was thanks to the students’ skills rather than projects being too easy. Two of our students were geographically close to their mentors so Jiri and Petr attended one or two regular HelenOS onsite project meetings held in Prague.

Besides the regular monthly team meetings, the HelenOS developers met for an annual coding week event called HelenOS Camp. This year the camp took place during the last coding week of the program and we were excited that one of our Google Summer of Code students, Petr Koupy, was able to join us for the event and hack with the rest of the HelenOS team.

Shortly after Google Summer of Code ended we merged contributions of all three students to our development branch, making their work part of the future HelenOS 0.5.0 release.

Below is a screencast showing HelenOS in its brand new role of a development platform, courtesy of Petr Koupy.


Friday, November 18, 2011

The AdWords API: Changes to accounts identification

The AdWords API allows you to manage a large number of accounts using a single login credential set. In order to determine which account to run a query against, a customer identifier is required.

Previously we allowed either the client email address or the client customer ID as the account identifier. Email addresses cannot handle a number of situations, however, such as a change of address or multiple accounts associated with one email. In addition, AdWords accounts created with no email address are simply not accessible by this identifier.

In order to unify account identification we decided to remove support for email addresses as account identifiers starting with v201109. From this version onward only client customer IDs are supported by the AdWords API.

Migrating to client customer ID

Most applications managing more than one AdWords account hold account identifiers in some form of local storage. If your application uses client email addresses as identifiers, you will need to convert those to client customer IDs, or add an extra field for the IDs.
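As a rough illustration of the "extra field" approach, the sketch below adds a customer ID to locally stored account records. It does not call the AdWords API: the record layout, the field names `client_email` and `client_customer_id`, and the `email_to_id` lookup are all hypothetical, and the lookup is assumed to have been built separately. The only format assumption is that a customer ID is ten digits, often displayed with dashes as in 123-456-7890.

```python
import re


def normalize_customer_id(raw):
    """Strip dashes and spaces from a customer ID and check it has ten digits."""
    digits = re.sub(r"[\s-]", "", raw)
    if not re.fullmatch(r"\d{10}", digits):
        raise ValueError(f"not a ten-digit customer ID: {raw!r}")
    return digits


def add_customer_ids(records, email_to_id):
    """Return copies of the records with a client_customer_id field added.

    records     -- list of dicts, each with a hypothetical 'client_email' key
    email_to_id -- dict mapping email address to customer ID (assumed to exist)
    Records with no known ID get None so they can be reviewed by hand.
    """
    migrated = []
    for record in records:
        updated = dict(record)  # leave the stored record untouched
        raw_id = email_to_id.get(record["client_email"])
        updated["client_customer_id"] = (
            normalize_customer_id(raw_id) if raw_id is not None else None
        )
        migrated.append(updated)
    return migrated
```

Keeping the email field alongside the new ID, rather than overwriting it, makes it easier to spot accounts that could not be matched before the older API versions are shut down.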

In order to make this migration smoother, we’ve provided an example that demonstrates how to obtain a client ID for a given email address. This example is included in every client library and also available online.

If you use our client libraries, it is easy to change how you identify a client account when making API requests. Depending on the language you use, you will need to update the configuration file or constructor parameter to include clientCustomerId instead of clientEmail. If you construct your queries manually, please refer to the SOAP headers documentation page for more details.

We would like to remind you that all existing versions that support identification by email addresses are scheduled for shutdown in Feb 2012.

Thursday, November 17, 2011

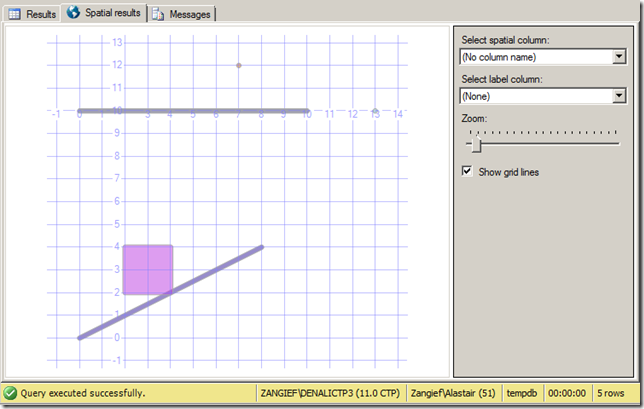

How to Transfer Spatial Data From SQL Server to ESRI Shapefile

There’s plenty of information on the ‘net describing how to import spatial data from ESRI shapefiles into SQL Server. However, what I haven’t yet seen is any example of how to do the reverse: taking geometry or geography data from SQL Server and dumping it into an ESRI shapefile.

There are plenty of reasons why you might want to do this. Despite its age and relative limitations (such as the maximum filesize per file, limited fieldname length, the fact that each file can contain only a single homogeneous type of geometry, etc.), the ESRI shapefile format is still largely the industry standard and is read by pretty much all spatial applications. Recently, for example, I needed to export some data from SQL Server to shapefile format so that it could be rendered as a map in mapnik (which, sadly, still can’t directly connect to a MS SQL Server database).

So, here’s a step-by-step guide, including the pitfalls to avoid along the way.

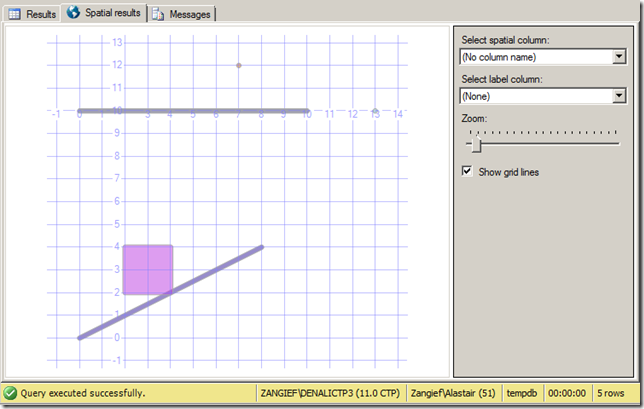

Here’s what the contents of this table look like in the SSMS Spatial Results tab:

This will fail with a couple of errors, but the first one to address is as follows:

ERROR 1: Attempt to write non-point (LINESTRING) geometry to point shapefile.

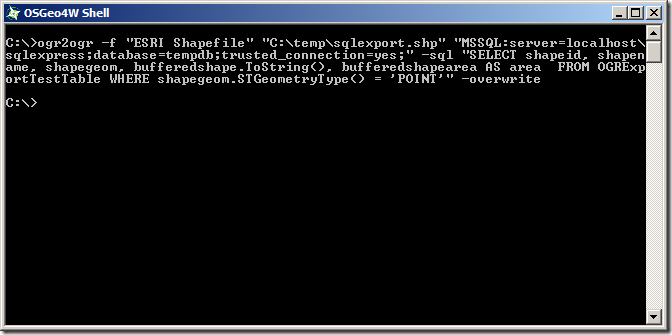

SQL Server will allow you to mix different types of geometry (Points, LineStrings, Polygons) within a single column of geometry or geography data. An ESRI shapefile, in contrast, can only contain a single homogeneous type of geometry. To correct this, rather than trying to dump the entire table by specifying tables=OGRExportTable in the connection string, we’ll have to manually select rows of only a certain type of geometry at a time by specifying an explicit SQL statement. Let’s start off by concentrating on the points only. This can be done with the -sql option, as follows:
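As a rough sketch of what such a points-only invocation could look like, here is a Python helper that assembles the OGR2OGR argument list. The `-f` and `-sql` options, the `MSSQL:` connection prefix and the `STGeometryType()` method are standard; the server name, database name and the geometry column name `shape` are placeholders I've assumed for illustration.

```python
def points_only_command(output_path, server, database,
                        table="OGRExportTable", geom_column="shape"):
    """Build an ogr2ogr argument list that exports only the Point rows."""
    # Hypothetical connection details: replace with your own server and database.
    connection = (
        f"MSSQL:server={server};database={database};trusted_connection=yes"
    )
    # STGeometryType() reports the type of each geometry instance,
    # so this WHERE clause keeps a single homogeneous type.
    sql = (
        f"SELECT * FROM {table} "
        f"WHERE {geom_column}.STGeometryType() = 'Point'"
    )
    return ["ogr2ogr", "-f", "ESRI Shapefile", output_path,
            connection, "-sql", sql]


command = points_only_command("sqlexport.shp", "localhost", "Spatial")
print(" ".join(command))
```

The list can be passed to `subprocess.run` as-is, which avoids having to quote the SQL statement for the shell.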

This time, a new error occurs:

ERROR 1: C:\temp\sqlexport.shp is not a directory.

ESRI Shapefile driver failed to create C:\temp\sqlexport.shp

Although our first attempt to export the entire table failed, it still created an output file at C:\temp\sqlexport.shp. Since we didn’t specify what to do when the output file already exists, running OGR2OGR a second time fails. To correct this, we’ll add the -overwrite flag.

Running it again, the error is now:

ERROR 6: Can’t create fields of type Binary on shapefile layers.

OK, so remember that our original table had two geometry columns. When you create a shapefile, one column is used to populate the shape information itself, while every other column becomes an attribute of that shape, stored in the associated .dbf file. Since the remaining geometry column is a binary value, it can’t be stored in the .dbf file. To resolve this, rather than using SELECT *, we’ll explicitly specify each column to be included in the shapefile. You could, if you want, omit the second geometry column completely from the list of selected fields. Instead, I’ll use the ToString() method to convert it to WKT, which can then be stored as an attribute value:

This should now run without errors, although you’ll still get a warning:

Warning 6: Normalized/laundered field name: ‘bufferedshapearea’ to bufferedsh

The names of any attribute fields associated with a shapefile can be at most 10 characters long. In this case, OGR2OGR has automatically truncated the name of the bufferedshapearea column for us, but you might not like to use the garbled “bufferedsh” as an attribute name in your shapefile. A better approach would be to specify an alias for long column names in the -sql statement itself. In this case, perhaps just “area” will suffice:

And now, for the first time, you should be both error and warning free:

So, now, just repeat the above but substituting the POINT, LINESTRING, and POLYGON geometry types, and creating three separate corresponding shapefiles:

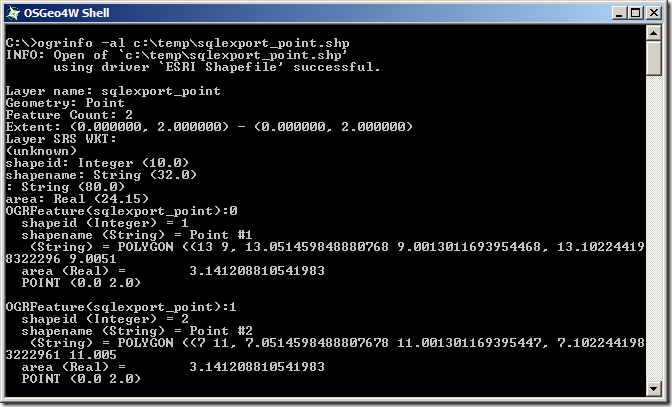

All done, right? Let’s just have a quick check on the created files to make sure they look OK. You can do this using ogrinfo with the -al option, which will give you a summary of the elements contained in any spatial data set. I mean, I’m sure they’re fine and everything, but… hang on a minute:

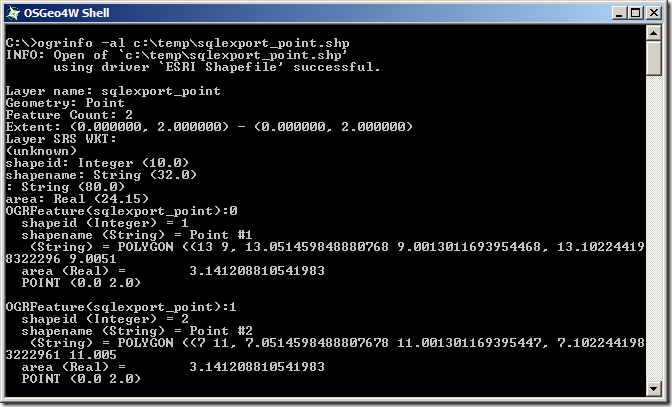

Here’s the POINT shapefile:

At first glance this looks OK: the shapefile contains 2 Point features with the correct attribute fields. But both points seem to have been incorrectly placed at coordinates of POINT(0.0 2.0). Huh?

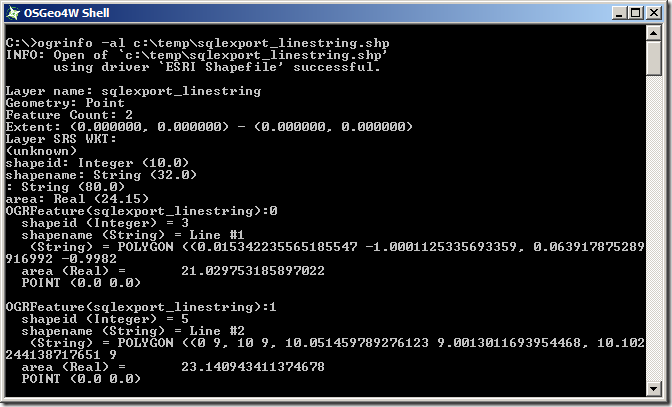

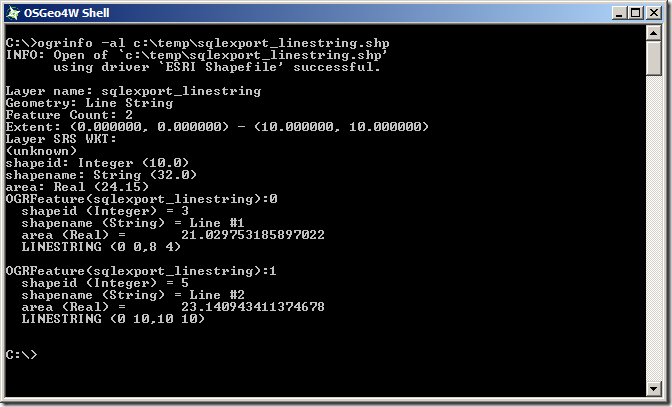

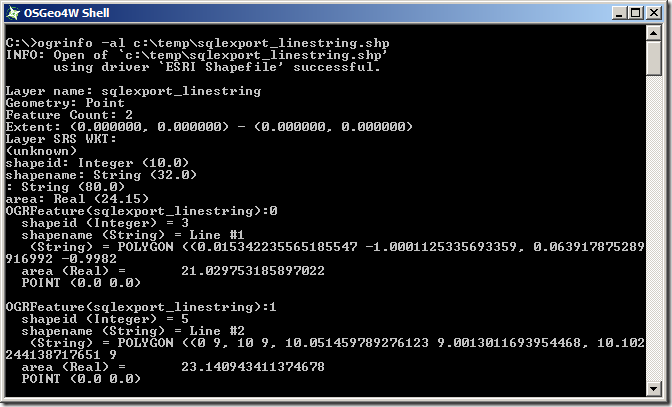

What about the LINESTRING shapefile:

This is even worse – even though we specified that only LineString geometries should be returned from the SQL query, the created shapefile thinks it contains 2 Point features (Geometry: Point, near the top). And those points both lie at POINT (0.0 0.0)…

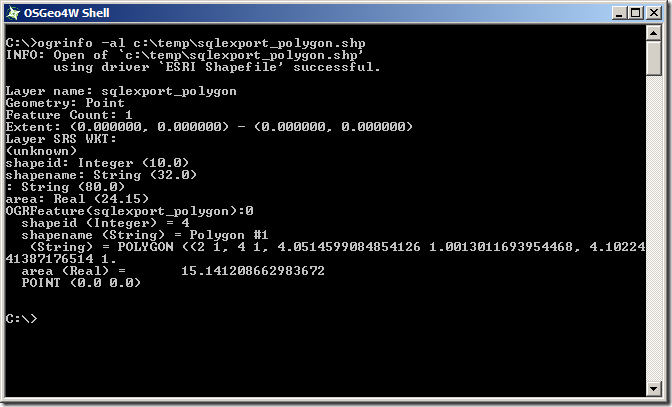

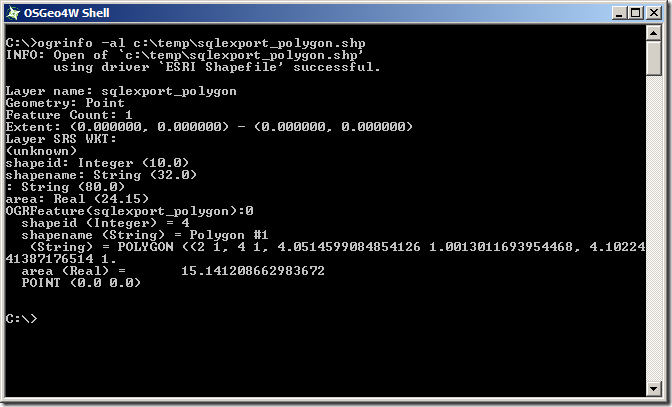

And the POLYGON shapefile:

Same problem as the LineString – it’s effectively an empty Point shapefile.

The problem seems to be that, even though we’re only returning geometries of a certain type from the SQL query, we haven’t explicitly stated that to the shapefile creator. So OGR2OGR is creating three empty shapefiles first (each set up to receive the default geometry type of POINT), and then trying to populate them with mismatched shape types, leading to corrupt data. To explicitly state the geometry type of the shapefiles created, we need to supply the SHPT layer creation option for each shapefile as specified at http://www.gdal.org/ogr/drv_shapefile.html, by adding -lco "SHPT=POLYGON", -lco "SHPT=ARC" (for LineStrings) etc. as follows:

Unfortunately, that brings us round to almost exactly the same error as we first started with when creating the LineString and Polygon shapefiles (Gah! I thought we’d got rid of them!):

ERROR 1: Attempt to write non-linestring (POINT) geometry to ARC type shapefile

ERROR 1: Attempt to write non-polygon (POINT) geometry to POLYGON type shapefile

We are only selecting geometries of the matching type for each shapefile by filtering the query based on STGeometryType(), so why does OGR2OGR think that we are selecting other types of geometries? What’s more, we haven’t yet explained why the point shapefile (which was, after all, being populated only with point geometries), incorrectly placed both points at coordinates POINT(0.0 2.0). It seems that something is corrupting the results of the SQL statement.

And here’s the “Ta-dah!” moment. According to http://www.gdal.org/ogr/drv_mssqlspatial.html, when retrieving spatial data from SQL Server, “The default [GeometryFormat] value is ‘native’, in this case the native SqlGeometry and SqlGeography serialization format is used”. However, this doesn’t actually appear to hold true. SQL Server stores geometry and geography data in a format very similar to, but slightly different from Well-Known Binary (WKB). The SQL Server binary values for the two points in the OGRExportTestTable are:

The Well-Known Binary of these two points is, instead, as follows:

As you can see, they’re very similar – the coordinate values are serialised as 8-byte floating point binary values in both cases, but the MSSQL Server native serialisation has a different and slightly longer (i.e. one byte more) header. Thus, if OGR2OGR is expecting to receive one type of data, but actually gets the other, all the bytes will be displaced slightly. This could explain both why OGR believed it was receiving the incorrect geometry types and also why the point coordinates were wrong.
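The byte-shift explanation can be checked by hand. The sketch below decodes the first point's two hex values quoted in this post, then deliberately reads the native bytes as if they were WKB. The native header layout used in `parse_native_point` (4-byte SRID, 1-byte version, 1-byte properties flag) is an assumption on my part, and that OGR takes this code path is only the hypothesis above, not something confirmed here.

```python
import struct

# Hex values for 'Point #1' as quoted in this post, split into their parts.
NATIVE_HEX = "00000000" + "01" + "0C" + "0000000000002A40" + "0000000000002440"
WKB_HEX = "01" + "01000000" + "0000000000002A40" + "0000000000002440"


def parse_wkb_point(data):
    """Decode a WKB point: 1 byte-order flag, 4-byte type, two 8-byte doubles."""
    endian = "<" if data[0] == 1 else ">"
    geom_type, x, y = struct.unpack_from(endian + "Idd", data, 1)
    return geom_type, x, y


def parse_native_point(data):
    """Decode the native bytes, ASSUMING a 6-byte header for a single point:
    4-byte SRID, 1-byte version, 1-byte properties, then two doubles."""
    srid, _version, _properties, x, y = struct.unpack_from("<iBBdd", data, 0)
    return srid, x, y


wkb = bytes.fromhex(WKB_HEX)
native = bytes.fromhex(NATIVE_HEX)

print(parse_wkb_point(wkb))        # WKB read as WKB
print(parse_native_point(native))  # native read with the assumed native layout
print(parse_wkb_point(native))     # native bytes misread as WKB
```

Read as WKB, the native bytes start with 0x00, which selects big-endian order. The geometry type then decodes as 1 (Point), the x value is vanishingly small and the y value is almost exactly 2. That is consistent with the POINT(0.0 2.0) reported by ogrinfo.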

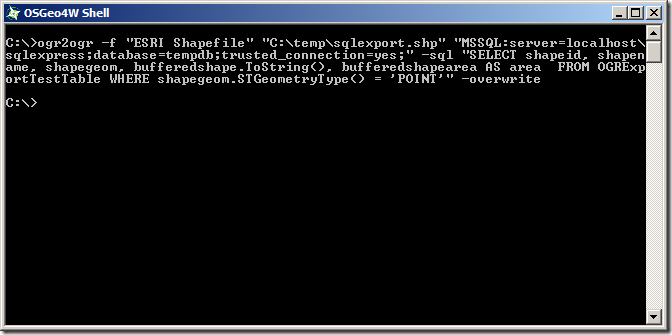

To correct this, rather than retrieving the shapegeom value directly, use the STAsBinary() method in the SQL statement to retrieve the WKB of the shapegeom column instead.

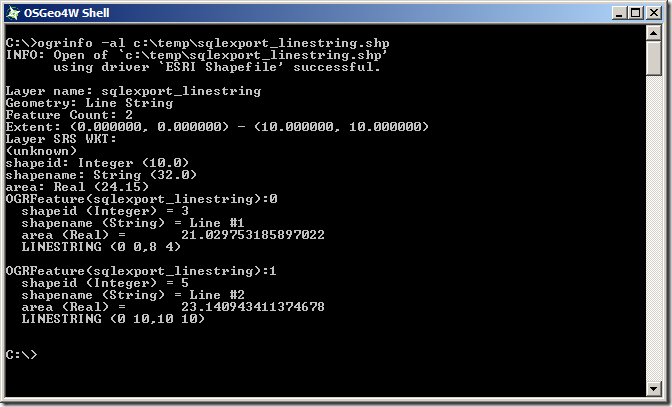

Right, we are definitely making progress now. Trying ogrinfo again reveals that all of the layers have the correct number of features, of the appropriate type, and all have the right coordinate values. Yay!

There’s just one nagging thing and that’s the Layer SRS WKT: (unknown). When we converted from the SQL Server native serialisation to Well-Known Binary, we lost the metadata of the spatial reference identifier (SRID) associated with each geometry. This information contains the details of the datum, coordinate reference system, prime meridian etc. that make the coordinates of each geometry relate to an actual place on the earth’s surface. In the shapefile format, this information is contained in a .PRJ file that usually accompanies each .SHP file but, since we haven’t supplied this information to OGR2OGR, none of the created shapefiles currently have associated .PRJ files, so ogrinfo reports the spatial reference system as “unknown”.

To create a PRJ file, we need to append the -a_srs option, supplying the same EPSG id as was supplied when creating the original geometry instances in the table. (Of course, if you have instances of more than one SRID in the same SQL Server table, you’ll have to split these out into separate shapefiles, just as you have to split different geometry types into separate shapefiles.)
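To see how many shapefiles a table will need, you can group its rows by geometry type and SRID before exporting. This is a minimal sketch: the `rows` list is hand-written to mirror the test table, standing in for the result of a query such as `SELECT shapeid, shapegeom.STGeometryType(), shapegeom.STSrid FROM OGRExportTestTable`, and `plan_shapefiles` is a hypothetical helper name.

```python
from collections import defaultdict


def plan_shapefiles(rows):
    """Group row ids by (geometry type, SRID); each group needs its own shapefile."""
    groups = defaultdict(list)
    for shapeid, geometry_type, srid in rows:
        groups[(geometry_type.upper(), srid)].append(shapeid)
    return dict(groups)


# Hand-written stand-in for the query result (ids follow the insert order).
rows = [
    (1, "Point", 2199),
    (2, "Point", 2199),
    (3, "LineString", 2199),
    (4, "Polygon", 2199),
    (5, "LineString", 2199),
]

for (geometry_type, srid), ids in plan_shapefiles(rows).items():
    print(f"{geometry_type} / EPSG:{srid}: rows {ids}")
```

For the test table this gives three groups, one per geometry type, all sharing SRID 2199. A table with mixed SRIDs would give more groups, and each group would need its own -a_srs value.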

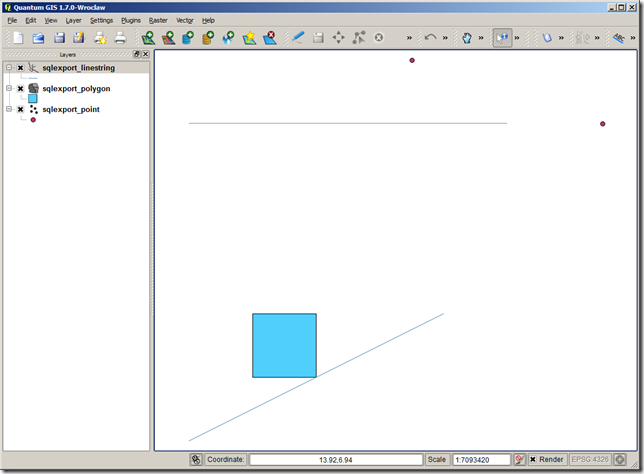

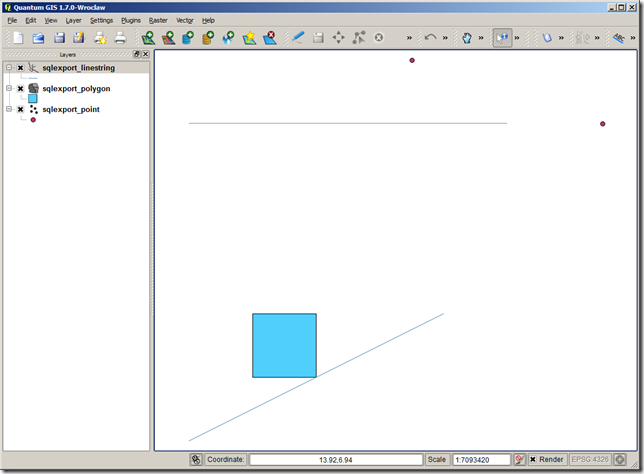

And here are the resulting shapefile layers loaded into QGIS:

Setup

To start with, make sure you’ve got a copy of OGR2OGR version 1.8 or greater (earlier versions do not include the MSSQL driver). You can either build it from the source supplied on the GDAL page or, for convenience, download and install the pre-compiled Windows binaries supplied as part of the OSGeo4W package.

Now, let’s set up some test data in a SQL Server table that we want to export. To test the full range of OGR2OGR features (or should that say, “the full range of error messages you can create”?), I’m going to create a table that contains two different geometry columns, an original geometry and a buffered geometry, populated using a range of different geometry types:

CREATE TABLE OGRExportTestTable (

shapeid int identity(1,1),

shapename varchar(32),

shapegeom geometry,

bufferedshape AS shapegeom.STBuffer(1),

bufferedshapearea AS shapegeom.STBuffer(1).STArea()

);

INSERT INTO OGRExportTestTable (shapename, shapegeom) VALUES

('Point #1', geometry::STGeomFromText('POINT(13 10)', 2199)),

('Point #2', geometry::STGeomFromText('POINT(7 12)', 2199)),

('Line #1', geometry::STGeomFromText('LINESTRING(0 0, 8 4)', 2199)),

('Polygon #1', geometry::STGeomFromText('POLYGON((2 2, 4 2, 4 4, 2 4, 2 2))', 2199)),

('Line #2', geometry::STGeomFromText('LINESTRING(0 10, 10 10)', 2199));

Exporting from SQL Server to Shapefile with OGR2OGR (via a string of errors along the way)

The basic pattern for OGR2OGR usage is given at http://www.gdal.org/ogr2ogr.html, with additional usage options for the SQL Server driver at http://www.gdal.org/ogr/drv_mssqlspatial.html. So, let’s start by just trying out a basic example to export the entire OGRExportTestTable from my local SQLExpress instance to a shapefile at C:\temp\sqlexport.shp, as follows (change the connection string to match your server/credentials as appropriate):

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;tables=OGRExportTestTable;trusted_connection=yes;"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT * FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT * FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite
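The 10-character limit on shapefile attribute names is easy to check before running an export. The sketch below is only an approximation: it uses plain truncation, which matches the “bufferedsh” result in the warning above, but OGR's real laundering rules may do more than this. `find_long_field_names` is a hypothetical helper, not part of OGR.

```python
SHAPEFILE_FIELD_NAME_LIMIT = 10  # maximum attribute name length in a .dbf file


def find_long_field_names(column_names, limit=SHAPEFILE_FIELD_NAME_LIMIT):
    """Return {original_name: truncated_name} for every name over the limit."""
    return {
        name: name[:limit]
        for name in column_names
        if len(name) > limit
    }


columns = ["shapeid", "shapename", "bufferedshapearea", "area"]
for original, truncated in find_long_field_names(columns).items():
    print(f"{original!r} would be cut to {truncated!r}; consider an alias")
```

Any name this reports is a candidate for an AS alias in the -sql statement.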

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_point.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_linestring.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'LINESTRING'" -overwrite

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_polygon.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POLYGON'" -overwrite

ogrinfo -al C:\temp\sqlexport_point.shp

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_point.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite -lco "SHPT=POINT"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_linestring.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'LINESTRING'" -overwrite -lco "SHPT=ARC"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_polygon.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom, bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POLYGON'" -overwrite -lco "SHPT=POLYGON"

0x00000000010C0000000000002A400000000000002440 0x00000000010C0000000000001C400000000000002840

0x01010000000000000000002A400000000000002440 0x01010000000000000000001C400000000000002840

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_point.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite -lco "SHPT=POINT"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_linestring.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'LINESTRING'" -overwrite -lco "SHPT=ARC"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_polygon.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POLYGON'" -overwrite -lco "SHPT=POLYGON"

The Final Product

So here’s the final script that will separate out points, linestrings, and polygons from a SQL Server table into separate shapefiles, via the WKB format but maintaining the SRID of the original instance, and retaining all other columns as attribute values in the .dbf file. Tested and confirmed to work with columns of either the geometry or geography datatype in SQL Server 2008/R2, or SQL Server Denali CTP3.

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_point.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POINT'" -overwrite -lco "SHPT=POINT" -a_srs "EPSG:2199"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_linestring.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'LINESTRING'" -overwrite -lco "SHPT=ARC" -a_srs "EPSG:2199"

ogr2ogr -f "ESRI Shapefile" "C:\temp\sqlexport_polygon.shp" "MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;" -sql "SELECT shapeid, shapename, shapegeom.STAsBinary(), bufferedshape.ToString(), bufferedshapearea AS area FROM OGRExportTestTable WHERE shapegeom.STGeometryType() = 'POLYGON'" -overwrite -lco "SHPT=POLYGON" -a_srs "EPSG:2199"
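Since the three commands differ only in the geometry type, output file and SHPT value, they can be generated from a small table. This is a minimal sketch that only builds the argument lists. `build_export_command` and `SHPT_BY_GEOMETRY` are hypothetical names, and the only flags used are the ones that appear in the script above.

```python
# Maps the value compared against STGeometryType() to the SHPT creation option.
SHPT_BY_GEOMETRY = {"POINT": "POINT", "LINESTRING": "ARC", "POLYGON": "POLYGON"}

CONNECTION = r"MSSQL:server=localhost\sqlexpress;database=tempdb;trusted_connection=yes;"


def build_export_command(geometry_type, output_path, connection, epsg):
    """Build the ogr2ogr argument list for one geometry type."""
    sql = (
        "SELECT shapeid, shapename, shapegeom.STAsBinary(), "
        "bufferedshape.ToString(), bufferedshapearea AS area "
        "FROM OGRExportTestTable "
        f"WHERE shapegeom.STGeometryType() = '{geometry_type}'"
    )
    return [
        "ogr2ogr", "-f", "ESRI Shapefile", output_path, connection,
        "-sql", sql,
        "-overwrite",
        "-lco", f"SHPT={SHPT_BY_GEOMETRY[geometry_type]}",
        "-a_srs", f"EPSG:{epsg}",
    ]


for geometry_type in SHPT_BY_GEOMETRY:
    path = rf"C:\temp\sqlexport_{geometry_type.lower()}.shp"
    print(build_export_command(geometry_type, path, CONNECTION, 2199))
```

Each list could then be passed to `subprocess.run(cmd, check=True)`. Passing the arguments as a list avoids the nested shell quoting that the hand-written commands need.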

Wednesday, November 16, 2011

The Street View Comparisons

I missed this impressive use of custom Street Views by the New York Times when it was published back in May. However, it is so good that it is worth posting even this long after the event.

Panoramas of Joplin Before and After the Tornado places Google Maps Street Views of Joplin side by side with panoramas taken after the Joplin tornado hit.

A number of newspapers have created clever visualisations of the damage caused by natural disasters by using before and after satellite imagery. For example, both ABC News and The NYT published comparisons of satellite imagery of Japan before and after this year's earthquake.

The New York Times, however, is the first newspaper to create a before and after visualisation using Street View. The paper took a number of 360-degree photos of areas of Joplin after the May tornado. It then used the Custom Street View functionality in the Google Maps API to create Street Views from its panoramas.

The custom panoramas have been placed side by side with the Google Maps Street Views from the same location. As you pan the Street View, the custom panorama moves to show exactly the same view, revealing the devastation caused by the tornado.

Via: Google Maps for Journalists

Monday, November 14, 2011

The SHOGUN machine learning toolbox

Google Summer of Code 2011 gave a big boost to the development of the SHOGUN machine learning toolbox. In case you have never heard of SHOGUN or machine learning, machine learning involves algorithms that do ‘intelligent’ and even automatic data processing and is currently used in many different settings. You will find machine learning in the face detection in your camera, compressing the speech in your mobile phone, and powering the recommendations in your favorite online shop, as well as predicting the solubility of molecules in water and the location of genes in humans, to name just a few examples. Interested? Shogun can help you give it a try.

SHOGUN is a machine learning toolbox, which is designed for unified large-scale learning for a broad range of feature types and learning settings. It offers a considerable number of machine learning models such as support vector machines for classification and regression, hidden Markov models, multiple kernel learning, linear discriminant analysis, linear programming machines, and perceptrons. Most of the specific algorithms are able to deal with several different data classes, including dense and sparse vectors and sequences using floating point or discrete data types. We have used this toolbox in several applications from computational biology, some of them coming with no less than 10 million training examples and others with 7 billion test examples. With more than a thousand installations worldwide, SHOGUN is already widely adopted in the machine learning community and beyond.

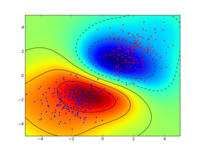

Some very simple examples stemming from a sub-branch of machine learning called supervised learning illustrate how objects represented by two-dimensional vectors can be classified into good or bad by learning a support vector machine. I would suggest installing the python_modular interface of SHOGUN and running the example interactive_svm_demo.py, which is also included in the source tarball. Two images illustrating the training of a support vector machine follow:

We were a first-time organization this year, i.e. taking part in our first Google Summer of Code. Having received many student applications, we were very happy to hear that we were given 5 very talented students, but we had to reject about 60 students (an acceptance rate of only 7%). Deciding which 5 students we would accept was an extremely tough decision for us. So in the end we raised the bar by requiring sample contributions even before the actual Google Summer of Code started. The quality of the contributions and the independence of the students aided our decision on the selection of the final five students.

At the end of the summer we now have a new core developer and various new features implemented in SHOGUN: Interfaces to new languages like Java, C#, Ruby, and Lua, a model selection framework, many dimension reduction techniques, Gaussian Mixture Model estimation and a full-fledged online learning framework. All of this work has already been integrated in the newly released shogun 1.0.0. To find out more about the newly implemented features read below.

Interfaces to the Java, C#, Lua and Ruby Programming Languages

Baozeng

Baozeng implemented SWIG typemaps that enable the transfer of objects native to the language one wants to interface with. In his project he added support for Java, Ruby, C# and Lua. His knowledge of SWIG helped us to drastically simplify shogun's typemaps for existing languages like Octave and Python, resolving other corner-case type issues. In addition, the typemaps bring a high-performance and versatile machine learning toolbox to these languages. It should be noted that shogun objects trained in e.g. Python can be serialized to disk and then loaded from any other language like Lua or Java. We hope this helps users working in multiple-language environments. Note that the syntax is very similar across all languages used. Compare for yourself: various examples for all languages (Python, Octave, Java, Lua, Ruby, and C#) are available.

Cross-Validation Framework

Heiko Strathmann

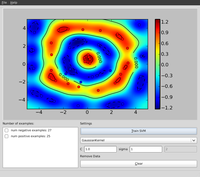

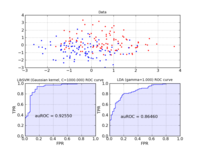

Nearly every learning machine has parameters which have to be determined manually. Before Heiko started his project, one had to manually implement cross-validation using (nested) for-loops. In his highly involved project Heiko extended shogun's core to register parameters and ultimately made cross-validation possible. He implemented different model selection schemes (train, validation, test split, n-fold cross-validation, stratified cross-validation, etc.) and created some examples for illustration. Note that various performance measures are available to measure how “good” a model is. The figure below shows the area under the receiver operating characteristic curve as an example.

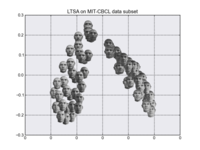
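To make the idea of n-fold cross-validation concrete, here is a minimal sketch in plain Python. It does not use shogun's model selection framework or its API. The helper names (`k_fold_indices`, `cross_validate`, `make_knn`) and the toy one-dimensional nearest-neighbour classifier are assumptions made purely for illustration, and the sketch assumes there are at least as many examples as folds.

```python
import random

def k_fold_indices(n_examples, n_folds, seed=0):
    """Shuffle the example indices and split them into n_folds folds."""
    indices = list(range(n_examples))
    random.Random(seed).shuffle(indices)
    return [indices[i::n_folds] for i in range(n_folds)]

def cross_validate(train_fn, predict_fn, xs, ys, n_folds=5, seed=0):
    """Return the mean validation accuracy over n_folds splits."""
    accuracies = []
    for held_out in k_fold_indices(len(xs), n_folds, seed):
        held = set(held_out)
        train_idx = [i for i in range(len(xs)) if i not in held]
        model = train_fn([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        correct = sum(predict_fn(model, xs[i]) == ys[i] for i in held_out)
        accuracies.append(correct / len(held_out))
    return sum(accuracies) / len(accuracies)

def make_knn(k):
    """Hypothetical toy learner: k-nearest-neighbour on 1-D inputs, labels +1/-1."""
    def train(xs, ys):
        return list(zip(xs, ys))  # the "model" is just the stored training set
    def predict(model, x):
        nearest = sorted(model, key=lambda p: abs(p[0] - x))[:k]
        return 1 if sum(label for _, label in nearest) > 0 else -1
    return train, predict

# Toy data: points below 1.0 are labelled -1, the rest +1.
xs = [i / 10 for i in range(20)]
ys = [-1 if x < 1.0 else 1 for x in xs]

# Model selection: score each candidate value of k and keep the best one.
scores = {k: cross_validate(*make_knn(k), xs, ys) for k in (1, 3, 5)}
best_k = max(scores, key=scores.get)
```

The loop over candidate values of `k` is the hand-written (nested) for-loop pattern that a model selection framework is meant to replace. A stratified variant would additionally balance the class labels within each fold.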

Dimension Reduction Techniques

Sergey Lisitsyn

Dimensionality reduction is the process of finding a low-dimensional representation of high-dimensional data while maintaining the core essence of the data. As one of the most important practical issues in applied machine learning, it is widely used for preprocessing real data. With a strong focus on memory requirements and speed, Sergey implemented the following dimension reduction techniques:

- Locally Linear Embedding

- Kernel Locally Linear Embedding

- Local Tangent Space Alignment

- Multidimensional scaling (with capability of landmark approximation)

- Isomap

- Hessian Locally Linear Embedding

- Laplacian Eigenmaps

Expectation Maximization Algorithms for Gaussian Mixture Models

Alesis Novik

The Expectation-Maximization algorithm is well known in the machine learning community. The goal of this project was the robust implementation of the Expectation-Maximization algorithm for Gaussian Mixture Models. Several computational tricks have been applied to address numerical and stability issues, like:

- Representing covariance matrices as their SVD

- Doing operations in log domain to avoid overflow/underflow

- Setting minimum variances to avoid singular Gaussians

- Merging/splitting of Gaussians.
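Two of the tricks listed above, working in the log domain and enforcing a minimum variance, can be sketched for the one-dimensional case in plain Python. This is not shogun's implementation. The function names and the `min_var` default are assumptions chosen for illustration only.

```python
import math

def log_gaussian(x, mean, var):
    """Log-density of a 1-D Gaussian evaluated at x."""
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)

def log_sum_exp(values):
    """Compute log(sum(exp(v))) by factoring out the largest term first."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def responsibilities(x, weights, means, variances, min_var=1e-6):
    """E-step for a single point: posterior probability of each component."""
    # Floor the variances so that no component becomes a singular Gaussian.
    variances = [max(v, min_var) for v in variances]
    log_joint = [math.log(w) + log_gaussian(x, m, v)
                 for w, m, v in zip(weights, means, variances)]
    log_norm = log_sum_exp(log_joint)
    return [math.exp(lj - log_norm) for lj in log_joint]

# A point far away from both components: the plain densities underflow to
# zero, but the log-domain computation still gives valid responsibilities.
naive_density = math.exp(log_gaussian(100.0, 0.0, 1.0))
resp = responsibilities(100.0, [0.5, 0.5], [0.0, 1.0], [1.0, 1.0])
```

Normalising the plain densities here would mean dividing zero by zero, whereas the log-domain version assigns almost all of the weight to the closer component.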

An illustrative example of estimating a one- and two-dimensional Gaussian follows below.

Large Scale Learning Framework and Integration of Vowpal Wabbit

Shashwat Lal Das

Shashwat introduced support for 'streaming' features into shogun. That is, instead of shogun's traditional way of requiring all data to be in memory, features can now be streamed from disk, enabling the use of very large data sets. He implemented support for dense and sparse vector based input streams as well as strings, and converted existing online learning methods to use this framework. He was particularly careful and even made it possible to emulate streaming from in-memory features. Finally, he integrated (parts of) Vowpal Wabbit, which is a very fast large scale online learning algorithm based on SGD.
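The streaming idea can be illustrated with a small Python sketch in which an online learner sees one example at a time and keeps only its weight vector in memory. This is not shogun's streaming API and not Vowpal Wabbit. The function names, the whitespace-separated "label followed by feature values" text format, and the simple perceptron update are all assumptions made for this example.

```python
import io

def stream_dense(lines):
    """Yield (label, features) one example at a time from an iterable of text lines."""
    for line in lines:
        parts = line.split()
        if not parts:
            continue  # skip blank lines
        yield float(parts[0]), [float(v) for v in parts[1:]]

def stream_from_memory(labels, vectors):
    """Emulate a stream from data that is already held in memory."""
    for label, vec in zip(labels, vectors):
        yield label, list(vec)

def online_perceptron(stream, n_features, learning_rate=1.0):
    """Make a single pass over a stream, updating the weights on each mistake."""
    w = [0.0] * n_features
    bias = 0.0
    for label, x in stream:
        score = bias + sum(wi * xi for wi, xi in zip(w, x))
        if label * score <= 0.0:  # misclassified or on the boundary
            w = [wi + learning_rate * label * xi for wi, xi in zip(w, x)]
            bias += learning_rate * label
    return w, bias

# io.StringIO stands in for a file on disk; an open file object works the same way.
data = io.StringIO("1 2.0 1.0\n-1 -1.0 -2.0\n1 1.5 0.5\n-1 -2.0 -0.5\n")
w_file, b_file = online_perceptron(stream_dense(data), n_features=2)

# The same learner fed from in-memory data through the emulated stream.
w_mem, b_mem = online_perceptron(
    stream_from_memory([1, -1, 1, -1],
                       [[2.0, 1.0], [-1.0, -2.0], [1.5, 0.5], [-2.0, -0.5]]),
    n_features=2)
```

Because the learner only consumes an iterator, the same code handles a file far larger than memory and an in-memory list, which is the point of emulating streams from in-memory features.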

Saturday, November 12, 2011

Google+ Pages

In life we connect with all kinds of people, places and things. There’s friends and family, of course, but there’s also the sports teams we root for, the coffee shops we’re loyal to, and the TV shows we can’t stop watching (to name a few).

So far Google+ has focused on connecting people with other people. But we want to make sure you can build relationships with all the things you care about—from local businesses to global brands—so today we’re rolling out Google+ Pages worldwide.

People + pages, better together

Google+ has always been a place for real-life sharing, and Google+ Pages is no exception. After all: behind every page (or storefront, or four-door sedan) is a passionate group of individuals, and we think you should be able to connect with them too.

For you and me, this means we can now hang out live with the local bike shop, or discuss our wardrobe with a favorite clothing line, or follow a band on tour. Google+ pages give life to everything we find in the real world. And by adding them to circles, we can create lasting bonds with the pages (and people) that matter most.

For businesses and brands, Google+ pages help you connect with the customers and fans who love you. Not only can they recommend you with a +1, or add you to a circle to listen long-term. They can actually spend time with your team, face-to-face-to-face. All you need to do is start sharing, and you'll soon find the super fans and loyal customers that want to say hello.

A number of pages are already available (see below), but any organization will soon be able to join the community at plus.google.com/pages/create.

* You can join Kermit and Miss Piggy for a live Hangout on the Muppets’ Google+ page today at 4:30pm PT!

Direct Connect from Google search

People search on Google billions of times a day, and very often, they're looking for businesses and brands. Today's launch of Google+ Pages can help people transform their queries into meaningful connections, so we're rolling out two ways to add pages to circles from Google search. The first is by including Google+ pages in search results, and the second is a new feature called Direct Connect.

Maybe you're watching a movie trailer, or you just heard that your favorite band is coming to town. In both cases you want to connect with them right now, and Direct Connect makes it easy—even automatic. Just go to Google and search for [+], followed by the page you're interested in (like +Angry Birds). We'll take you to their Google+ page, and if you want, we’ll add them to your circles.

Direct Connect works for a limited number of pages today (like +Google, +Pepsi, and +Toyota), but many more are coming. In the meantime, organizations can learn more about Direct Connect in our Help Center.

With Google+, we strive to bring the nuance and richness of real-life sharing to software. Today’s initial launch of Google+ Pages brings us a little bit closer, but we’ve still got lots of improvements planned, and miles to go before we sleep.

Thursday, November 10, 2011

Wednesday, November 9, 2011

Rome Trip for Google Earth Community Members

Google recently invited some top contributors and volunteers for the Google Earth Community to a user summit. The Google Earth Community is an online discussion forum and meeting place to discuss content found or created for use in Google Earth. Over 1 million people from all over the world are members of the forum. Since Google Earth is all about geography, and its volunteers and top contributors are from all over the world, it is only logical that Google hold the summit in a unique and interesting geographic location.

This year, Google invited over 30 top community moderators and contributors to Rome, Italy. It was a chance for many of these people, who have been participants for years now, to meet one another face to face. We also got to meet a few of the Googlers who work on Google Earth. As part of the summit, future directions of the discussion forums and of Google Earth itself were discussed.

Google Earth Blog was invited to Rome as well. Although Mickey couldn't make it, Google was kind enough to bring me all the way from New Caledonia to Rome for the week-long event. My wife only allowed me to go if I brought her along, so we both made the long, but worthwhile, trip to the event.

Google also organized tours of some of the top attractions in Rome. So, we got to see things like the Colosseum, the Forum, the Vatican, the Sistine Chapel, and St. Peter's Basilica. I also gave a short presentation on our travels with the Tahina Expedition and how we are using Google Earth to share our trip. You can read more about our trip (and see some photos) over at our Tahina Expedition blog.

Google recently updated the 3D buildings layer for Rome to add much more detail to this beautiful city. We also got to hear from one of the Googlers behind the effort to convert the detailed 3D models of ancient Rome, which you can view in one of the Google Earth layers.

A big THANK YOU to Google for bringing some of the world's most enthusiastic Google Earth users together to such a wonderful place! You can read more about this in the recent post at Google LatLong. You can also read about an earlier similar meeting held in Washington, DC in 2007.

Sunday, November 6, 2011

New tools publishers can use to maximize their revenue

These increasingly blurry lines are not only resulting in highly engaging forms of content for users, but many new revenue opportunities for publishers. A wave of innovation and investment over the past several years has also created better performing ads, a larger pool of online advertisers, and new technologies to sell and manage ad space. Together, these trends are helping to spur increased investment in online advertising. We’ve seen this in our own Google Display Network: our publisher partners have seen spending across the Google Display Network from our largest 1,000 advertisers more than double in the last 12 months.

With all these new opportunities in mind, we’re introducing new tools for our publisher partners—in our ad serving technology (DoubleClick for Publishers) and in our ad exchange (DoubleClick Ad Exchange).

Video and mobile in DoubleClick for Publishers

Given the changes in the media landscape, it’s not surprising that we’ve seen incredible growth for both mobile and video ad formats over the past year: the number of video ads on the Google Display Network has increased 350 percent in the past 12 months, while AdMob, our mobile network, has grown by more than 200 percent.

Before now, it’s been difficult for publishers to manage all their video and mobile ad space from a single ad server—the platform publishers use to schedule, measure and run the ads they’ve sold on their sites. To solve this challenge, we’re rolling out new tools in our latest version of DoubleClick for Publishers that enable publishers to better manage video and mobile inventory. Publishers will be able to manage all of the ads they’re running—across all of their webpages, videos and mobile devices—from a single dashboard, and see which formats and channels are performing best for them.

A handful of publishers have already begun using the video feature and it appears to be performing well for them: we’ve seen 55 percent month-over-month growth in video ad volume in the last quarter. In other words, publishers are now able not only to produce more video content, but to make more money from it as well.

Direct Deals on the DoubleClick Ad Exchange

Another way publishers make money is to sell their advertising via online exchanges, like the DoubleClick Ad Exchange, where they can offer their ad space to a wide pool of competing ad buyers. This has already proven to generate substantially more revenue for publishers, and as a result we’ve seen significant growth in the number of trades on our exchange (158 percent year over year).

However, publishers have told us that they’d also like the option of making some of their ad space available only to certain buyers at a certain price, similar to how an art dealer might want to offer a painting first to certain clients before giving it to an auction house to sell. So we’re introducing Direct Deals on the DoubleClick Ad Exchange, which gives publishers the ability to make these “first look” offers. For example, using Direct Deals, a news publisher could set aside all of the ad space on their sports page and offer it first to a select group of buyers at a specific price, and then if those buyers pass on the offer, automatically place that inventory into the Ad Exchange’s auction.
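The “first look” flow described above can be sketched as a few lines of Python. This is only an illustration of the decision order, not how the DoubleClick Ad Exchange is implemented. The function name, the data shapes, and the simplification that the highest auction bidder wins and pays its own bid are all assumptions.

```python
def sell_impression(preferred_buyers, fixed_price, auction_bids):
    """Offer the ad space to preferred buyers first, then fall back to an auction.

    preferred_buyers: list of (buyer_name, accepts_offer) pairs, in offer order.
    fixed_price:      the price attached to the first-look offer.
    auction_bids:     dict mapping buyer_name to that buyer's bid in the open auction.
    Returns (winning_buyer, price), or (None, 0.0) if the space goes unsold.
    """
    # Step 1: first look. The first preferred buyer who accepts gets the space.
    for buyer, accepts in preferred_buyers:
        if accepts:
            return buyer, fixed_price

    # Step 2: every preferred buyer passed, so the space goes to the auction.
    if not auction_bids:
        return None, 0.0
    winner = max(auction_bids, key=auction_bids.get)
    return winner, auction_bids[winner]

# A preferred buyer accepts, so the auction never runs.
direct = sell_impression([("A", False), ("B", True)], 5.0, {"X": 3.0})

# All preferred buyers pass, so the highest auction bid wins.
fallback = sell_impression([("A", False)], 5.0, {"X": 3.0, "Y": 4.0})
```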

Looking back at that blog, news site and app, we’d like them to have one more thing in common: being able to take advantage of new opportunities to grow their businesses even further. These new tools, together with the other solutions we’re continuing to develop, are designed to help businesses like them (and all our publisher partners) do just that, and get the most out of today’s advertising landscape.

Friday, November 4, 2011

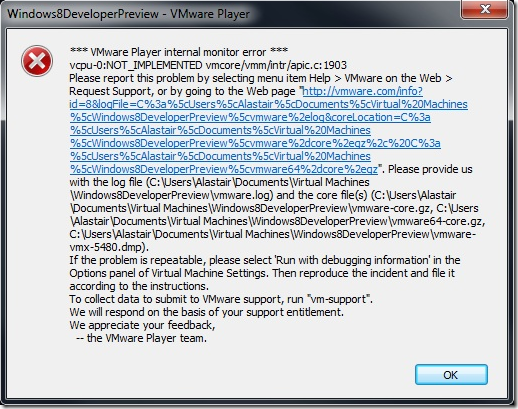

Windows 8: Preview for Developers